Modern Storage Technologies in 2020: What You Need to Know

Fast data volume growth and people's habit of consuming increasingly more online content encourage storage producers to continually innovate. What are some of the new technologies, and what are their characteristics? Let's take a closer look.

Why the Need for Innovative Storage Technologies?

First, a few numbers to put the discussion in context. Every minute, 4.3 million videos are watched on YouTube, 400 hours of new video are uploaded, and more than 474,000 tweets are shared. Google, for its part, handles over 3.5 billion searches per day. In a 2018 study, IDC reported that there were around 33 zettabytes of data in the world and estimated that this number will grow to 175 zettabytes by 2025. That's so much data that it would take a single user around 1.8 billion years to download all of it at current internet speeds.
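The 1.8 billion years figure can be sanity-checked with simple arithmetic. The sketch below assumes an average connection speed of 25 Mb/s and decimal zettabytes; neither value is stated in the article, so treat both as assumptions chosen for illustration.

```python
# Back-of-the-envelope check of the download-time claim.
ZETTABYTE = 10**21                       # bytes, decimal SI prefix (assumption)
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def download_years(total_bytes: float, link_mbps: float) -> float:
    """Years needed to download total_bytes over a link of link_mbps megabits/s."""
    bits = total_bytes * 8
    seconds = bits / (link_mbps * 10**6)
    return seconds / SECONDS_PER_YEAR


ASSUMED_LINK_MBPS = 25                   # assumption, not a figure from the article
years_2025 = download_years(175 * ZETTABYTE, ASSUMED_LINK_MBPS)
print(f"{years_2025 / 1e9:.2f} billion years")
```

Under these assumptions the result lands close to the quoted figure; a faster or slower assumed link scales the answer proportionally.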

4.333 billion people are internet users at the moment, an increase of 8 percent from 2018. Out of them, 3.534 billion are social media users, up 9 percent from last year. More than 3.463 billion people use social media on their phones, an increase of 7.8 percent from 2018.

It’s no wonder that the need for fast data access and high data throughput storage solutions is bigger than ever – and growing. That's why there's a rapid adoption of new technologies that complement modern storage approaches.

New Storage Technologies

One of these is storage-class memory (SCM), also referred to as non-volatile random access memory (NVRAM). NVRAM retains data even after electrical power is removed. Because it is byte-addressable, like dynamic RAM (DRAM), it is very fast to access, especially compared with standard NAND flash, which is read and written in pages and erased in whole blocks.
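To see why access granularity matters, here is a minimal sketch that counts how many bytes a medium has to move to serve a small read. The 16 KiB page size is an illustrative assumption, and the model ignores caches, controllers, and CPU cache-line effects.

```python
def bytes_moved(offset: int, length: int, granularity: int) -> int:
    """Bytes a medium must transfer to serve [offset, offset + length)
    when it can only be accessed in units of `granularity` bytes."""
    if length <= 0:
        return 0
    first_unit = offset // granularity
    last_unit = (offset + length - 1) // granularity
    return (last_unit - first_unit + 1) * granularity


BYTE_ADDRESSABLE = 1               # every byte has its own address
ASSUMED_NAND_PAGE = 16 * 1024      # hypothetical page size, for illustration only

request = (4100, 8)                # read 8 bytes starting at offset 4100
scm_bytes = bytes_moved(*request, BYTE_ADDRESSABLE)
nand_bytes = bytes_moved(*request, ASSUMED_NAND_PAGE)
print(scm_bytes, nand_bytes)
```

With coarse-grained media, a tiny request still costs at least one full unit, and a request that straddles a boundary costs two.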

There are several types of NVRAM technologies. The oldest approach is based on static RAM (SRAM), which is volatile on its own and is typically paired with a battery to retain data. SRAM has a more complicated structure than DRAM, with at least six transistors per cell and more active connections. The transistors are arranged so that the cell retains its state without refresh, which makes SRAM well suited to reads and low-latency applications. However, this structure also makes it harder to write to and more prone to degradation over time.

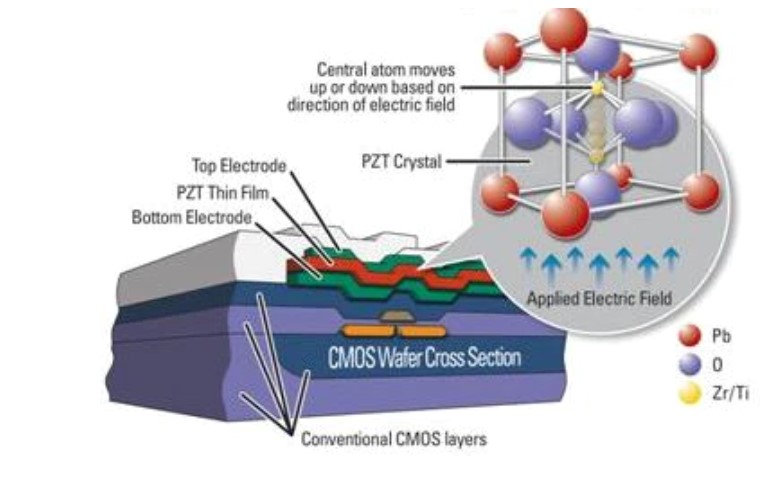

Newer non-volatile memory technologies, such as ferroelectric RAM (FRAM) and magnetoresistive RAM (MRAM), have better specs and broader use cases than SRAM. FRAM uses a ferroelectric capacitor as its storage element: an electric dipole switches polarity under an external electric field. Over time, temperature and free electrical charges cause the dipole to relax, which eventually leads to failures.

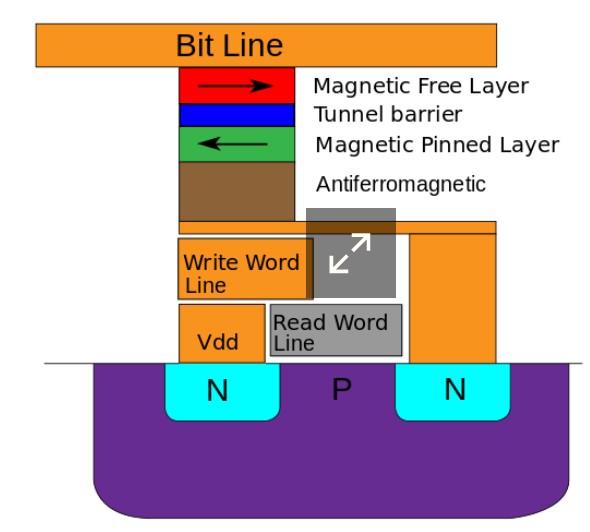

Unlike FRAM, MRAM does not rely on any physical motion: it stores each bit as the magnetic state of a ferromagnetic material. As a result, it does not wear out over time and is not affected by temperature. MRAM also has faster access and cycle times.

Other technologies are on the way, like phase-change RAM (PRAM), SONOS, resistive RAM (RRAM), or Nano-RAM. Only time will tell which ones are reliable and cheap enough to mass-produce and hit the shelves.

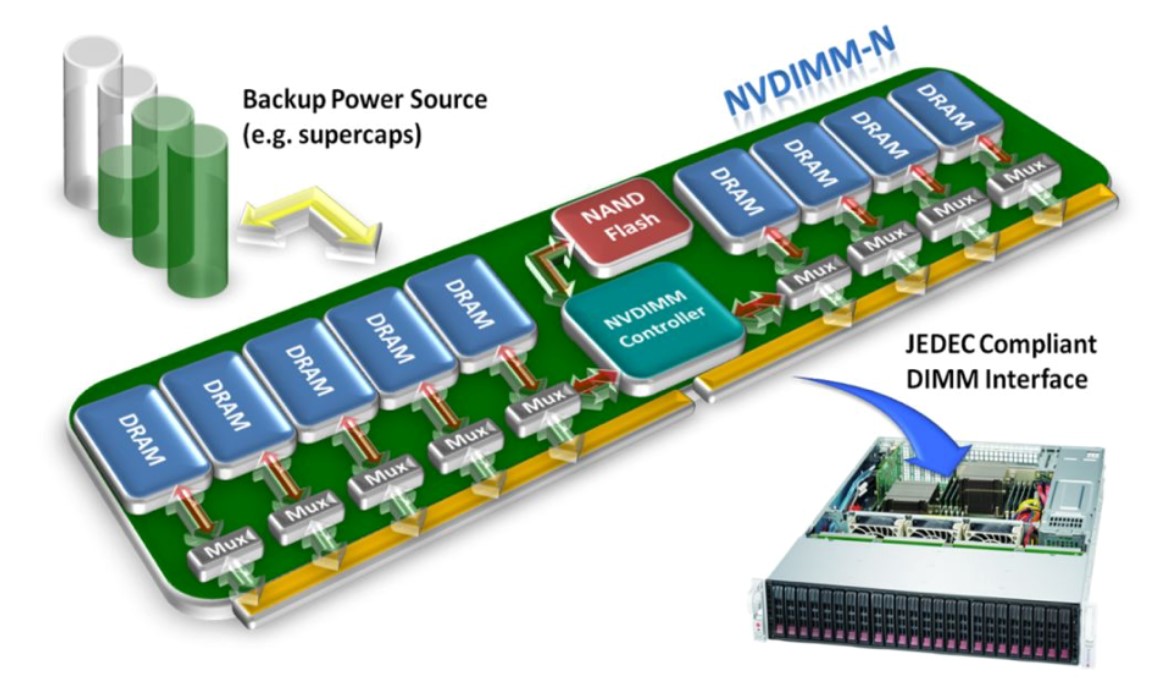

NVRAM also comes in the form of NVDIMMs, although some NVDIMM variants, such as NVDIMM-N, do not have a persistent RAM technology on board. NVDIMM-N simply combines DRAM with NAND chips on the same module and copies data from the DRAM chips to the flash when electrical power is lost.
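The save-on-power-loss behavior of NVDIMM-N can be pictured with a toy model. The class and method names below are hypothetical and exist only for illustration; a real module does this in hardware, relying on a backup energy source to finish the copy.

```python
class ToyNvdimmN:
    """Toy model: DRAM serves all accesses, flash is only a backup copy."""

    def __init__(self, size: int) -> None:
        self.dram = bytearray(size)   # volatile working memory
        self.flash = bytes(size)      # persistent backup, idle in normal operation

    def write(self, offset: int, data: bytes) -> None:
        if offset < 0 or offset + len(data) > len(self.dram):
            raise ValueError("write outside module capacity")
        self.dram[offset:offset + len(data)] = data

    def read(self, offset: int, length: int) -> bytes:
        return bytes(self.dram[offset:offset + length])

    def power_loss(self) -> None:
        # The controller copies DRAM to flash, then DRAM content is gone.
        self.flash = bytes(self.dram)
        self.dram = bytearray(len(self.dram))

    def power_restore(self) -> None:
        # On the next boot the saved image is copied back into DRAM.
        self.dram = bytearray(self.flash)


module = ToyNvdimmN(16)
module.write(0, b"txlog")
module.power_loss()
module.power_restore()
print(module.read(0, 5))
```

Note that the flash is never in the normal read or write path, which is why NVDIMM-N performs like DRAM during regular operation.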

NVDIMM-P, on the other hand, will use one of the persistent memory technologies described above, together with a complex controller and buffer scheme. It will probably be released sometime in 2020, likely in the same timeframe as DDR5. The use cases that benefit most from persistent memory are applications that rely on fast transaction logging and in-memory computing, such as databases.

Fast technology development and affordability might become a reality thanks to market leaders such as Intel and Everspin, which are currently focusing on this technology. For now, the price is quite steep: Intel sells its 128GB NVDIMM module at around 570 USD and the 256GB module at 2100 USD, while the 512GB module costs an astounding 6700 USD. Hopefully, prices will drop as the technology is adopted more widely.
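A quick way to compare these prices is to normalize them per gigabyte. The snippet below uses only the three price points quoted above.

```python
# Module capacity in GB -> quoted price in USD (figures from the text above).
PRICES_USD = {128: 570, 256: 2100, 512: 6700}


def price_per_gb(prices: dict) -> dict:
    """Return USD per GB for each module capacity."""
    return {capacity: usd / capacity for capacity, usd in prices.items()}


per_gb = price_per_gb(PRICES_USD)
for capacity, usd_per_gb in per_gb.items():
    print(f"{capacity} GB module: {usd_per_gb:.2f} USD/GB")
```

The per-gigabyte price rises with capacity rather than falling, so the largest module carries a clear density premium.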

Another new technology is NVMe over Fabrics, or NVMeOF. This protocol allows NVMe storage commands to be transferred between servers over InfiniBand and Ethernet using RDMA. RDMA lets applications on different hosts transfer memory contents directly, bypassing the OS and CPU, which keeps latency very low and saves computing resources. With NVMeOF, this can be done over Ethernet cables by connecting nodes in high-performance computing or distributed storage scenarios. The NVMe protocol can be transported over various media, such as Fibre Channel (FC), InfiniBand, Ethernet, or next-generation fabrics.

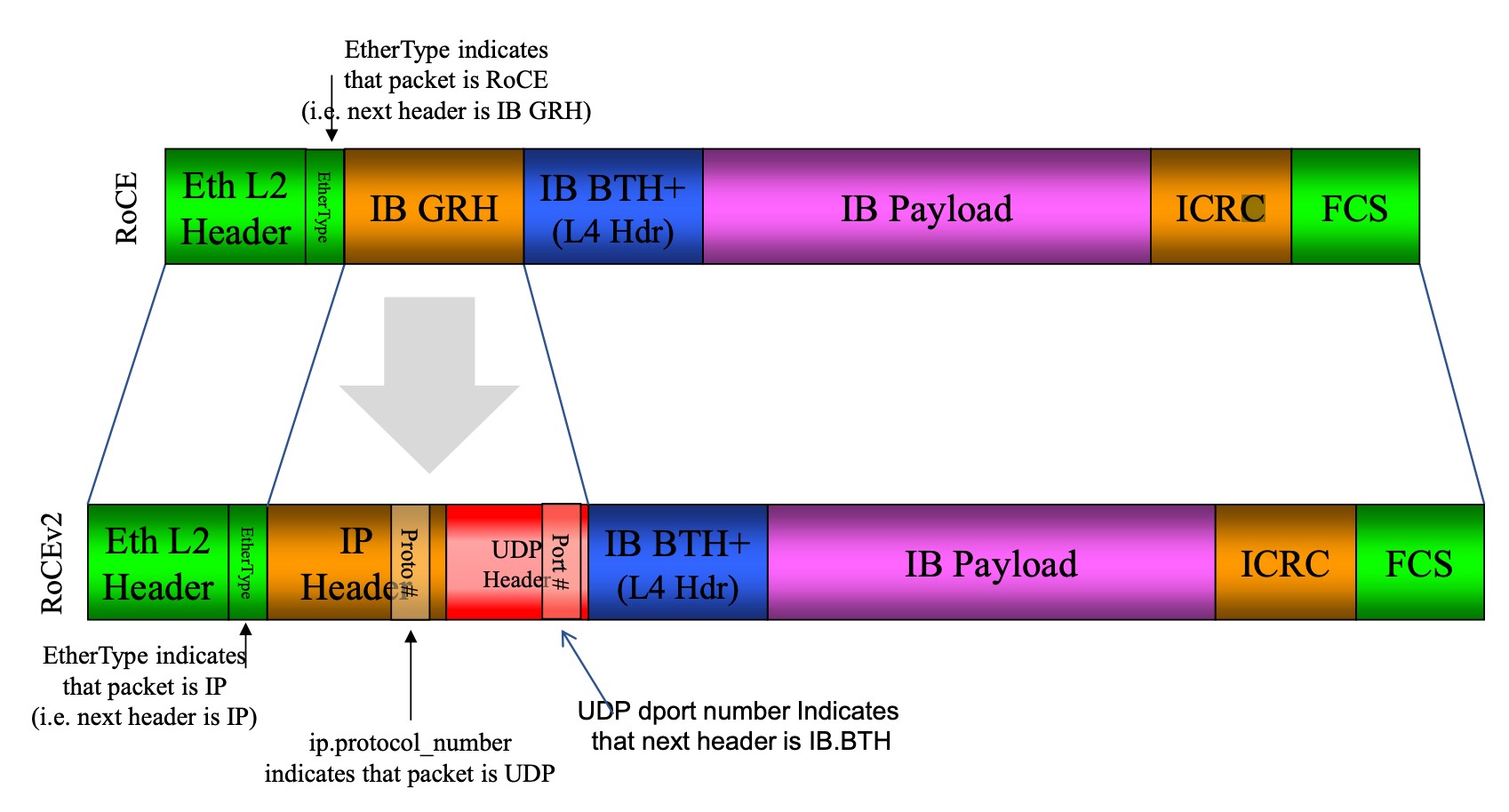

The figure below shows how RoCE, which carries the InfiniBand transport over Ethernet, was adapted into RoCEv2, which adds IP and UDP headers, with each header described:

Figure 1. RoCE vs RoCEv2 Frames

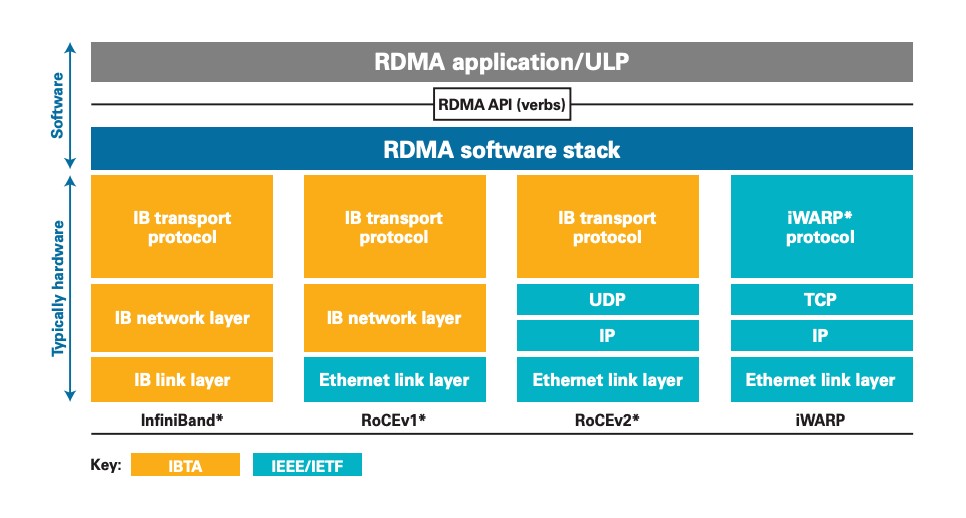
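As a rough companion to the frame comparison, the sketch below adds up per-frame header overhead for both versions. The byte sizes are the commonly documented ones and are not taken from the figure; the model assumes no VLAN tag, IPv4 without options, and no extended transport headers.

```python
# (field name, size in bytes) for everything in the frame except the payload.
ROCE_V1 = [
    ("Ethernet header", 14),
    ("InfiniBand GRH", 40),      # global route header
    ("InfiniBand BTH", 12),      # base transport header
    ("ICRC", 4),
    ("Ethernet FCS", 4),
]

ROCE_V2_IPV4 = [
    ("Ethernet header", 14),
    ("IPv4 header", 20),         # replaces the GRH
    ("UDP header", 8),           # destination port 4791 identifies RoCEv2
    ("InfiniBand BTH", 12),
    ("ICRC", 4),
    ("Ethernet FCS", 4),
]


def overhead(layout: list) -> int:
    """Total non-payload bytes per frame."""
    return sum(size for _, size in layout)


def efficiency(layout: list, payload: int) -> float:
    """Fraction of the frame that is payload."""
    return payload / (payload + overhead(layout))


for name, layout in (("RoCE", ROCE_V1), ("RoCEv2", ROCE_V2_IPV4)):
    print(f"{name}: {overhead(layout)} bytes overhead, "
          f"{efficiency(layout, 4096):.1%} efficient at 4 KiB payload")
```

The InfiniBand transport header stays the same in both versions; only the network layer underneath it changes.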

The most popular NVMe over Ethernet implementations are RoCEv2 and iWARP. Although iWARP (not an acronym) may sound like an upgraded warp drive from Star Trek, it is not as fast as its cousin RoCEv2, because of its complexity and added overhead. RoCEv2 is lighter and faster but lacks the robustness of iWARP, since iWARP runs over TCP while RoCEv2 runs over UDP.

Figure 2. RDMA Transport Protocols Layers

Both iWARP and RoCEv2 are routable protocols, so they can be used across subnets rather than only within a single Layer 2 network, although at the cost of slightly higher latency and, in the case of UDP, no delivery guarantees from the transport layer. To make RoCEv2 fully reliable, reliability checks could be added inside the RDMA protocol stack in the future.

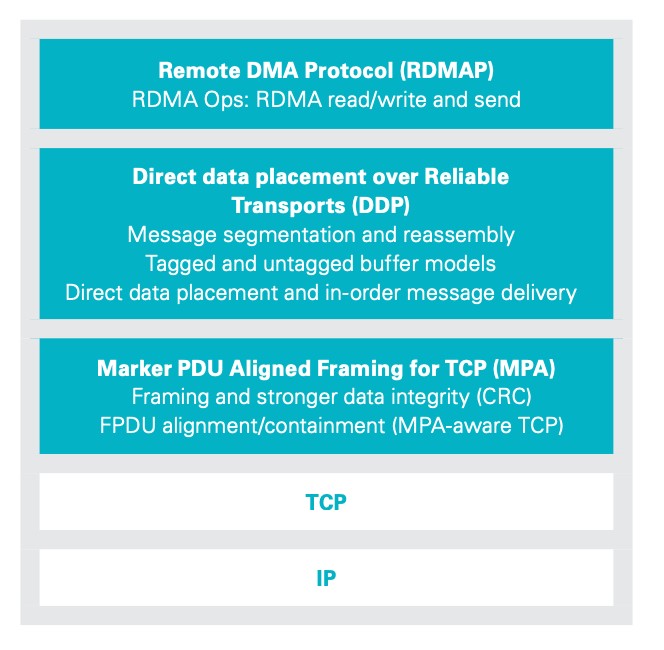

Figure 3. iWARP Protocols
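The layering differences discussed above can be summarized in a few lines. The stacks below are a simplified view based on the publicly documented protocol layers, not a reproduction of the figures, and the helper names are hypothetical.

```python
# Simplified protocol stacks, listed from the wire upward.
STACKS = {
    "RoCEv1": ["Ethernet", "IB network (GRH)", "IB transport"],
    "RoCEv2": ["Ethernet", "IP", "UDP", "IB transport"],
    "iWARP": ["Ethernet", "IP", "TCP", "MPA", "DDP", "RDMAP"],
}


def is_routable(stack: list) -> bool:
    """A stack can cross IP routers only if it contains an IP layer."""
    return "IP" in stack


def l4_transport(stack: list):
    """Return the Layer 4 protocol the stack relies on, if any."""
    return next((layer for layer in stack if layer in ("TCP", "UDP")), None)


for name, stack in STACKS.items():
    print(f"{name}: routable={is_routable(stack)}, L4={l4_transport(stack)}")
```

The extra layers iWARP places on top of TCP are where its additional complexity and overhead come from.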

Although both RoCEv2 and iWARP need a physical adapter, or RNIC (RDMA NIC), installed on each host in the network, iWARP can also be tested with regular Ethernet NICs using Soft-iWARP. You can clone it from GitHub and try it yourself.

What Is the Impact of These New Technologies?

Both SCM and NVMeOF technologies helped pave the way for some exciting new approaches in storage.

One of these is computational storage, which turns the old storage system idea on its head: instead of moving the data to the processing node, the computation is done much closer to where the data is stored, saving significant host CPU resources and time. Although a storage fabric composed of fast media and NVMe is blazing fast, computational storage systems (CSS) kick things up a notch. CSS relies on in-situ processing of data by adding an ARM processor to the controller of an NVMe SSD. Data still needs to travel to the CPU, but this happens much faster due to the Common Flash Interface, or CFI.

CFI is a standard introduced by JEDEC that allows datasheet parameters to be stored on the device itself. This information is kept in the form of CFI tables, which can be queried by the application that requests the data.
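The benefit of in-situ processing described above comes down to how many bytes cross the bus to the host. Here is a minimal sketch of that idea; `ToyDrive` and its methods are hypothetical and do not correspond to any vendor's API.

```python
from typing import Callable, List


class ToyDrive:
    """Toy drive that counts the bytes it ships to the host."""

    def __init__(self, records: List[bytes]) -> None:
        self.records = records
        self.bytes_sent_to_host = 0

    def read_all(self) -> List[bytes]:
        # Conventional path: every record travels to the host CPU.
        self.bytes_sent_to_host += sum(len(r) for r in self.records)
        return list(self.records)

    def filter_in_situ(self, predicate: Callable[[bytes], bool]) -> List[bytes]:
        # Computational path: the embedded processor filters on the drive,
        # so only matching records travel to the host.
        matches = [r for r in self.records if predicate(r)]
        self.bytes_sent_to_host += sum(len(r) for r in matches)
        return matches


def is_error(record: bytes) -> bool:
    return record.startswith(b"error")


logs = [b"error: disk 3", b"ok", b"ok", b"error: fan 1"]

conventional = ToyDrive(logs)
host_side = [r for r in conventional.read_all() if is_error(r)]

computational = ToyDrive(logs)
drive_side = computational.filter_in_situ(is_error)

print(conventional.bytes_sent_to_host, computational.bytes_sent_to_host)
```

Both paths return the same records; the difference is where the filter runs and how much data has to move as a result. The gap widens as the share of matching records shrinks.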

For example, NGD Systems uses an ARM Cortex-A53 processor embedded in the controller of an SSD. Samsung uses a Xilinx FPGA with ARM cores to offload tasks like compression, deduplication, encryption, or even analytics from the CPU. Eideticom developed the NoLoad accelerator, which fits into a 2.5in U.2 NVMe SSD form factor but, instead of flash chips, carries a Xilinx FPGA accelerator and some memory. This approach keeps the current disk setup and uses the PCIe bus to offload jobs like erasure coding, RAID, data compression, and deduplication; if you ask me, all the goodies you would want from a swift Storage Santa. Eideticom and Nallatech claim that one NoLoad Gen3x4 device can compress and decompress data using the zlib algorithm at over 3 GB/s. Impressive, right?
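To put the 3 GB/s claim in perspective, you can measure what a single host CPU core achieves with zlib. The sketch below uses Python's standard `zlib` module on a synthetic, highly repetitive log sample; results depend heavily on the machine, the compression level, and the data, so this is a rough reference point rather than a benchmark of any accelerator.

```python
import time
import zlib


def zlib_throughput(data: bytes, level: int = 6):
    """Return (compress GB/s, decompress GB/s) of input data on this host."""
    t0 = time.perf_counter()
    compressed = zlib.compress(data, level)
    t1 = time.perf_counter()
    restored = zlib.decompress(compressed)
    t2 = time.perf_counter()
    if restored != data:
        raise RuntimeError("round trip failed")
    gigabytes = len(data) / 1e9
    # Guard against a zero interval on very small inputs.
    return gigabytes / max(t1 - t0, 1e-9), gigabytes / max(t2 - t1, 1e-9)


# Synthetic sample (assumption): about 9 MB of identical log lines.
sample = b"timestamp=1577836800 level=INFO msg=heartbeat\n" * 200_000
compress_gbps, decompress_gbps = zlib_throughput(sample)
print(f"compress: {compress_gbps:.2f} GB/s, decompress: {decompress_gbps:.2f} GB/s")
```

Whatever the host achieves here is CPU time taken away from applications, which is the cost an offload device is meant to remove.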

A second approach is intent-based storage, an interesting new storage concept with great potential. Building on the idea behind computational storage, companies like Datera and Hammerspace have developed some great proprietary solutions.

Hammerspace converges multi-cloud environments under the same hood, using a global filesystem on top of the actual data. Each piece of data receives its own metadata that tells the global filesystem where the information is, how to access it, and details about it, through a metadata engine. Once the metadata index is created, a machine learning algorithm comes into play, analyzing metadata through analytics and offering more details about the data, while also continually improving file access and performance.

Datera is another crucial player in the software-based data services industry. It offers a solution similar to Hammerspace's, but it also provides a way to interact with the storage environment via a GUI or an API, according to customer needs. Depending on customer resource requirements, a complex QoS implementation on the storage infrastructure can add or remove compute nodes automatically and move data to hotter or colder storage tiers, making on-the-fly scalability possible.
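The idea of moving data between hotter and colder tiers can be illustrated with a toy policy. This is not Datera's or Hammerspace's implementation or API; the thresholds, names, and the single "reads per hour" metric are all hypothetical simplifications.

```python
from dataclasses import dataclass
from typing import List, Tuple

HOT_THRESHOLD = 1000    # hypothetical: promote at or above this many reads/hour
COLD_THRESHOLD = 100    # hypothetical: demote at or below this many reads/hour


@dataclass
class Volume:
    name: str
    reads_per_hour: int
    tier: str = "cold"


def retier(volumes: List[Volume]) -> List[Tuple[str, str, str]]:
    """Move volumes between tiers and return (name, old tier, new tier) moves.

    The gap between the two thresholds keeps a volume with borderline
    activity from bouncing back and forth between tiers.
    """
    moves = []
    for volume in volumes:
        if volume.tier == "cold" and volume.reads_per_hour >= HOT_THRESHOLD:
            target = "hot"
        elif volume.tier == "hot" and volume.reads_per_hour <= COLD_THRESHOLD:
            target = "cold"
        else:
            continue
        moves.append((volume.name, volume.tier, target))
        volume.tier = target
    return moves


fleet = [
    Volume("orders-db", 5000),
    Volume("archive-2018", 3, tier="hot"),
    Volume("reports", 400),
]
print(retier(fleet))
```

A real intent-based system would derive such decisions from declared service levels and many more signals, but the control loop has the same shape: observe, compare against the intent, and move data.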

Conclusion

The data industry is evolving rapidly and can be a vicious environment for companies that don't keep up with the trends or do not invest properly in the development of new products. However, the same fast-paced evolving industry encourages the emergence of new hardware technologies like storage-class memories, NVMe over Fabrics, and new software storage services aiming towards computational and intent-based storage solutions.

About the Author

Catalin Maita is a Storage Engineer at Bigstep. He is a tech enthusiast with a focus on open-source storage technologies.
